AI has a certain style of writing. We can’t always put our fingers on it, but we can often spot the influence of AI in written communication.

I recently had a discussion with a friend who is an engineering professor, and I can see their concern as it relates to AI. On the one hand, you want students to develop certain skills. On the other hand, you don’t want to create another “You’ll never have a calculator in your pocket” scenario that everyone will call up as a failure of your course for years to come.

How to find the right balance, I’m sure, is a constantly evolving consideration for people involved in all phases of education. When to adopt the abacus, TI-82, Chromebook, or AI? In business and casual situations we find ourselves wondering the same thing: “Did a person write this?”

A co-worker recently sent me this paper, which takes a statistics-based look at detecting AI writing style. The authors analyzed more than 15 million biomedical abstracts to find out whether LLMs have affected the writing style and vocabulary of the scientific literature as a whole. One of their key metrics was the use of what they call “excess” words: words that LLMs use more often than humans do, and that contribute more to style than to content.

Their research found that the rise of large language models (LLMs) has been accompanied by a noticeable uptick in specific stylistic word choices in academic writing. The findings indicate that at least 13.5% of scholarly articles published in 2024 involved some level of LLM assistance.
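
Why “at least”? Here is a simplified way to see how a jump in word frequency can give a lower bound. The assumptions are mine, and this is not necessarily the authors’ exact estimator. Suppose a fraction Δ of post-LLM texts are LLM-assisted, human-written texts still use a given word with its pre-LLM frequency q, and LLM-assisted texts use it with some frequency s ≤ 1. The observed frequency p is then:

$$p = (1-\Delta)\,q + \Delta\,s \;\le\; (1-\Delta)\,q + \Delta = q + \Delta\,(1-q)$$

Rearranging (with p ≥ q and 0 ≤ q < 1):

$$\Delta \;\ge\; \frac{p-q}{1-q} \;\ge\; p-q$$

Because LLM-assisted texts rarely use any single word every time (s is usually well below 1), the true share can be considerably larger than this bound.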

choices in academic writing. The findings indicate that at least 13.5% of scholarly articles published in 2024 involved some level of LLM assistance.

The approach they took was to compare “pre-LLM” papers with papers written after chatbots like ChatGPT became popular. This let them contrast papers known to be written by humans with papers that may have had AI influence, and identify the elements that became more common as AI became more widely available.
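To make the before/after comparison concrete, here is a minimal Python sketch. It assumes word frequency is measured as the share of documents containing a word, and it flags words whose frequency rose by both a ratio and an absolute gap. The function names, thresholds, and toy corpora are hypothetical illustrations, not the paper’s code or data.

```python
import math
import re
from collections import Counter


def doc_frequency(docs: list[str]) -> dict[str, float]:
    """Share of documents in which each word appears at least once."""
    counts = Counter()
    for doc in docs:
        # Count each word once per document.
        counts.update(set(re.findall(r"[a-z']+", doc.lower())))
    return {word: c / len(docs) for word, c in counts.items()}


def excess_words(pre_docs, post_docs, min_ratio=2.0, min_gap=0.1):
    """Return (word, ratio, gap) tuples for words whose frequency rose sharply."""
    q = doc_frequency(pre_docs)   # baseline: the "pre-LLM" corpus
    p = doc_frequency(post_docs)  # corpus written after chatbots became popular
    flagged = []
    for word, p_w in p.items():
        q_w = q.get(word, 0.0)
        ratio = p_w / q_w if q_w > 0 else math.inf  # relative increase
        gap = p_w - q_w                              # absolute increase
        if ratio >= min_ratio and gap >= min_gap:
            flagged.append((word, ratio, gap))
    return sorted(flagged, key=lambda t: t[2], reverse=True)


# Toy corpora, invented for illustration only.
pre = [
    "we measured the effect of the drug",
    "the results show a clear effect",
    "we report the effect in mice",
    "samples were measured twice",
]
post = [
    "we delve into the effect of the drug",
    "the results underscore a clear effect",
    "we delve into the intricate effect in mice",
    "samples were measured twice",
]

for word, ratio, gap in excess_words(pre, post):
    print(f"{word}: ratio={ratio}, gap={gap:.2f}")
```

Note that “into” gets flagged along with “delve”. Words that simply ride along with a stylistic phrase show up too, which is one reason a curated list is useful.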

The authors were not attempting to create an AI detector, and they note the limitations of their approach: it requires known-human and known-AI samples. Still, their ideas and findings suggested to me that it might be possible to build a tool that detects common AI speech patterns in a given text sample.

AI will continue to evolve as a tool, so we can’t just ban it everywhere. Still, it could be useful to have a way to flag whether something might be AI-assisted. If I were a professor, I could see myself wanting something to back up my “gut instinct.” If a piece of writing “feels” like it’s AI, I would like a third party to suggest WHY it does or does not show signs of potential AI influence.

I decided to write a tool that applies this and other research toward that goal, but I restricted myself to a standalone script that calls no external API or LLM, so it is fully portable and easy to share. Announcing the AIStyleScan Project! Download the code from GitHub, or DEMO it here.

Read on to learn how it works!

Demo AIStyleScan here!

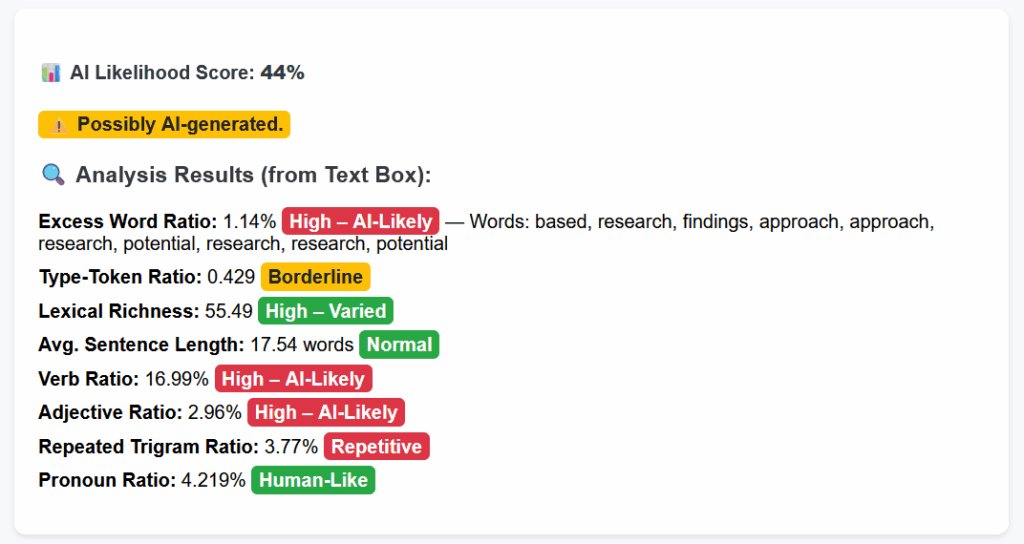

Here are the “tests” that I implemented in this first release:

- Excess Word Ratio: Flags overused, formalized vocabulary common in LLMs.

- Type-Token Ratio: Measures diversity of word use. Lower = more repetition, which is more AI-like.

- Lexical Richness: Ratio of unique words adjusted by length (entropy-like).

- Average Sentence Length: AI often favors longer, clause-heavy sentences.

- Verb / Adjective Ratio: AI tends to overuse action and descriptive words.

- Trigram Repetition: Repeating the same 3-word patterns is typical in AI writing.

- Pronoun Ratio: Human text often includes “I”, “we”, etc. AI text less so.
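For readers curious what some of these tests look like in code, here is a minimal Python sketch of four of them: type-token ratio, average sentence length, trigram repetition, and pronoun ratio. It is not AIStyleScan’s code. The tokenizer, the naive sentence splitter, and the pronoun list are my assumptions. For lexical richness I use Guiraud’s root TTR (unique words divided by the square root of total words) as one common length-adjusted stand-in, since the script’s exact formula isn’t given here. The verb/adjective test is omitted because it needs a part-of-speech tagger such as NLTK’s `pos_tag`.

```python
import math
import re
from collections import Counter

# Assumed first/second-person pronoun list; the real script's list may differ.
PRONOUNS = {"i", "me", "my", "mine", "we", "us", "our", "ours", "you", "your", "yours"}


def tokenize(text: str) -> list[str]:
    return re.findall(r"[a-z']+", text.lower())


def split_sentences(text: str) -> list[str]:
    # Naive splitter: break after ., ! or ? followed by whitespace.
    return [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]


def type_token_ratio(words: list[str]) -> float:
    """Unique words / total words. Lower means more repetition."""
    return len(set(words)) / len(words) if words else 0.0


def root_ttr(words: list[str]) -> float:
    """Guiraud's index: a length-adjusted alternative to plain TTR."""
    return len(set(words)) / math.sqrt(len(words)) if words else 0.0


def avg_sentence_length(text: str) -> float:
    """Mean number of words per sentence."""
    sentences = split_sentences(text)
    if not sentences:
        return 0.0
    return sum(len(tokenize(s)) for s in sentences) / len(sentences)


def trigram_repetition(words: list[str]) -> float:
    """Share of trigram occurrences that repeat a trigram seen before."""
    trigrams = list(zip(words, words[1:], words[2:]))
    if not trigrams:
        return 0.0
    repeated = sum(c - 1 for c in Counter(trigrams).values())
    return repeated / len(trigrams)


def pronoun_ratio(words: list[str]) -> float:
    """Share of words that are personal pronouns."""
    return sum(w in PRONOUNS for w in words) / len(words) if words else 0.0


sample = "I think we should go. I think we should stay."
words = tokenize(sample)
print(type_token_ratio(words), avg_sentence_length(sample),
      trigram_repetition(words), pronoun_ratio(words))
```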

The Excess Word Ratio test is the one most closely tied to the research paper that inspired this effort. For it, I created a list of 300+ “excess words” that represents a curated subset of the words identified in the study, and I compute how often those words occur in the sample text as a ratio. This test has the highest weighting in my calculations.
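A minimal Python sketch of an excess word ratio, assuming the ratio is “words found in the list” divided by “total words in the sample.” The handful of words below is a hypothetical stand-in picked for illustration. It is not copied from the project’s curated list.

```python
import re

# Hypothetical mini-list for illustration; AIStyleScan ships its own 300+ words.
EXCESS_WORDS = {"delve", "delves", "underscore", "underscores",
                "intricate", "pivotal", "showcasing", "notably"}


def excess_word_ratio(text: str, excess_words: set[str] = EXCESS_WORDS) -> float:
    """Fraction of words in `text` that appear in the excess-word list."""
    words = re.findall(r"[a-z']+", text.lower())
    if not words:
        return 0.0
    hits = sum(1 for w in words if w in excess_words)
    return hits / len(words)


print(excess_word_ratio("This study delves into an intricate and pivotal question."))
```

Here 3 of the 9 words (“delves”, “intricate”, “pivotal”) are on the list, so the ratio is about 0.33. Real prose would score far lower, which is why thresholds for this test tend to be small.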

The other tests came from various sources that describe them as solid indicators of potential AI influence. I’ve spent some time tuning the weights and thresholds and think they are in a reasonable place, but they are all defined at the top of the script so that you can tune your own.
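One simple way to combine tests like these is a weighted sum: each test that crosses its threshold contributes its weight, and the total is normalized to 0 to 1. The sketch below shows that idea in Python. All weights, thresholds, and names here are hypothetical and are not the values AIStyleScan uses. Returning the list of tests that fired is what lets a tool explain WHY a text looks AI-like, not just give a score.

```python
# Hypothetical weights; the excess word test is weighted highest, as in the article.
WEIGHTS = {
    "excess_word_ratio": 3.0,
    "type_token_ratio": 1.0,
    "avg_sentence_length": 1.0,
    "trigram_repetition": 1.0,
    "pronoun_ratio": 1.0,
}

# Hypothetical (threshold, direction) pairs: "above" means higher values look AI-like.
THRESHOLDS = {
    "excess_word_ratio": (0.01, "above"),
    "type_token_ratio": (0.5, "below"),
    "avg_sentence_length": (25.0, "above"),
    "trigram_repetition": (0.05, "above"),
    "pronoun_ratio": (0.02, "below"),
}


def weighted_score(metrics: dict[str, float]) -> tuple[float, list[str]]:
    """Return a 0..1 score and the names of the tests that flagged the text."""
    total = sum(WEIGHTS.values())
    score = 0.0
    flagged = []
    for name, weight in WEIGHTS.items():
        if name not in metrics:
            continue  # skip tests that were not computed
        threshold, direction = THRESHOLDS[name]
        value = metrics[name]
        hit = value > threshold if direction == "above" else value < threshold
        if hit:
            score += weight
            flagged.append(name)
    return score / total, flagged


score, reasons = weighted_score({
    "excess_word_ratio": 0.02,
    "type_token_ratio": 0.6,
    "avg_sentence_length": 30.0,
    "trigram_repetition": 0.01,
    "pronoun_ratio": 0.0,
})
print(round(score, 3), reasons)
```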

I’ve released the code here under the MIT license: https://github.com/RickGouin/AIStyleScan

This is an Arms Race

Does this tool reliably detect AI? Not really.

Does this tool reliably detect common patterns of speech that AI often uses? Definitely.

After building this tool, I now see a sort of futility in attempting to detect AI influence. This tool cannot, on its own, determine for certain if something was written by AI or not. One of the strongest indicators of AI influence that I could implement in a standalone script was the frequency of “excess words”. How long will it take before the words in this list are de-emphasized in the various major LLMs? It may have already begun. I don’t think any static suite of tests will work in the long run. This begins an ongoing effort to stay one step ahead.

This whole effort becomes a race to identify new ways of distinguishing AI-generated writing/speech/images, while the various companies behind these products try to make them more and more human-like. It's a race that I’m not sure I want to join in earnest.

I could definitely build a more effective tool if I opened myself up to using ML models and LLMs to measure things like perplexity. I think this sort of analysis is the only hope for a long-term solution to detecting AI-generated content. My goal here, though, was to create a standalone script using static statistical analysis.
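For context, perplexity measures how “surprised” a language model is by a text. It is the exponential of the average negative log-probability per token, and unusually low perplexity under an LLM is a commonly cited signal of machine-generated text. A real detector would take per-token probabilities from an LLM. In the Python sketch below, a toy add-one-smoothed unigram model stands in purely to make the arithmetic concrete. It is an illustration of the formula, not a usable detector.

```python
import math
from collections import Counter


def perplexity(token_probs: list[float]) -> float:
    """exp(-(1/N) * sum(log p_i)) over the model's per-token probabilities."""
    if not token_probs:
        raise ValueError("need at least one token probability")
    avg_nll = -sum(math.log(p) for p in token_probs) / len(token_probs)
    return math.exp(avg_nll)


def unigram_probs(train_words: list[str], test_words: list[str]) -> list[float]:
    """Toy stand-in for an LLM: add-one (Laplace) smoothed unigram probabilities."""
    counts = Counter(train_words)
    vocab = set(train_words) | set(test_words)
    total = len(train_words) + len(vocab)
    return [(counts[w] + 1) / total for w in test_words]


# A model that assigns 1/4 to every token has perplexity 4:
# it is as uncertain as a fair choice among 4 options.
print(perplexity([0.25, 0.25, 0.25, 0.25]))
print(perplexity(unigram_probs(["a", "a", "b"], ["a", "c"])))
```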

In the end, you may find this script useful in identifying WHY a certain piece of text feels like AI to you. It should in no way be used as the sole arbiter of whether or not something was written by AI.

Want to try the tool out? You can play with it here: https://www.rickgouin.com/ai-scan/

This entire article was written manually, one keystroke at a time, by me.

What do you think? Let me know in the comments below.

[…] to see if I could discern LLM and Human created content. The genesis of that tool was described here. During that project, I learned a lot about what distinguishes human created text from LLM […]